Hey everyone, I am back with another post, this time about a class that I have just completed: COMP 410, Software Engineering Methodology. You may have heard bad things about this class or its prerequisite, COMP 310, at Rice University, but let me tell you: this is the BEST class that I have ever taken. The amount you will learn and experience is directly proportional to the time you put into the class.

About the class

COMP 410 is a pure discovery-based learning course designed to give students real-life, hands-on training in a wide variety of software engineering issues that arise in creating large-scale, state-of-the-art software systems. The class forms a small software development “company” that works to deliver a product to a customer. Our customer is SLB (formerly Schlumberger), one of the biggest oilfield service companies, so the pressure is high. The unique thing about the class is that we don’t get told what to do or how to do anything. The tools, frameworks, and design choices are all our decisions. We came into the class knowing nothing about tools like C#, .NET, Azure services, Next.js or TypeScript, but we came out feeling more accomplished and confident than ever.

What is our product?

The purpose of the product

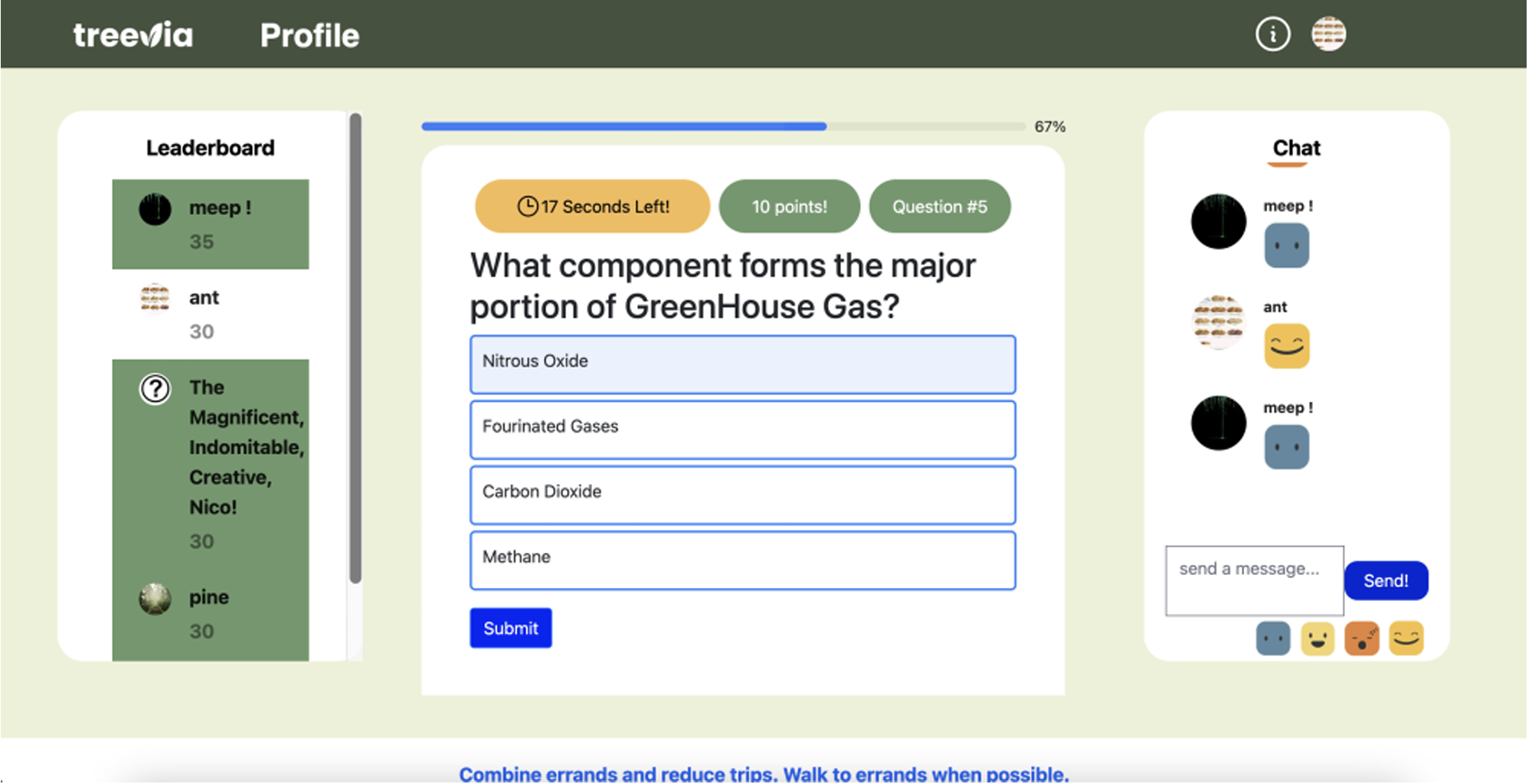

- The product, Treevia, is a sustainability awareness trivia game aimed at educating users about sustainability, responsibility, and their impact on the planet.

- Objectives:

- Raise awareness about sustainability: The game focuses on sustainability topics to increase users’ knowledge and understanding.

- Engage users: The gameplay experience is designed to captivate and involve players, making it enjoyable and interactive.

- Inspire repeated gameplay: The game is designed to encourage users to play multiple sessions, fostering continued engagement and learning.

- Support 1-n players per game: The game accommodates both single-player and multiplayer modes, allowing users to play alone or with others.

- Increase marketability of SLB: The Product showcases SLB’s commitment to sustainability, diversity, and innovation, enhancing the company’s reputation and attractiveness.

Detailed breakdown of the application

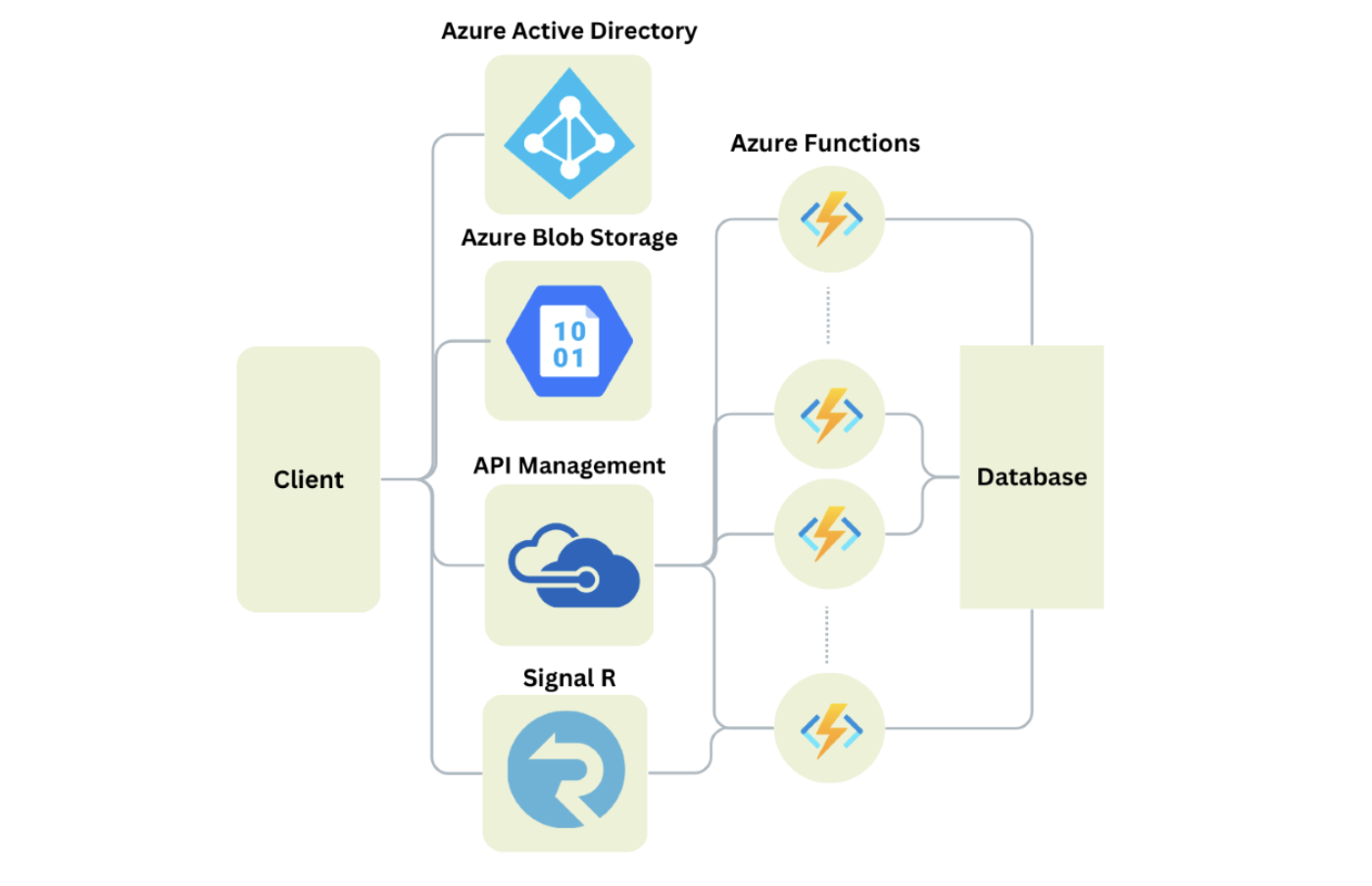

- The application has 3 main parts: the browser application, the cloud application, and the database.

Browser Application:

- Authentication: Users can log in through Azure Active Directory to access the application and their personalized profiles. They can also log out when they’re finished.

- Player UI: This interface is for users participating in the game as players. It includes various features:

- Main Menu: Users can access game instructions, view their profile, log out, create private games (as the creator), create public games (as the creator), join other players’ private game lobbies using unique codes, and view available public games.

- Game Room: The game room has three stages: Lobby, Play, and Results.

- Lobby Stage: Users can access instructions, send and receive messages, leave the room (with the creator role being passed to another player if the creator leaves), and, as the creator, start the game.

- Play Stage: Users can access instructions, receive questions with a countdown timer, submit responses, receive correct answers, send and receive messages to the entire room, view random fun facts during gameplay, and see the session’s leaderboard.

- Results Stage: Users can view the session’s leaderboard and rankings and choose to return to the lobby or main menu.

- Admin UI: This interface is for admins who have additional privileges and responsibilities within the game. Features include:

- Retrieving existing question types.

- Creating new questions of existing types (slider, single choice, true/false).

- Creating new fun facts.

- Creating new question types.

Cloud Application:

- Authentication: The cloud application handles user authentication, creating corresponding records in the database for new users, and extracting user records (including game statistics and username) for existing users.

- Profile: User statistics, such as points earned, games played, and games won, are updated in the database to track user progress and achievements.

- Upload information: Users with the appropriate role/permissions can create and upload new questions, fun facts, and tags to the database, expanding the game’s content and variety.

- Lobby Creation & Join: The cloud application manages the creation and joining of game lobbies. It creates lobby records in the database, making them available and visible to other users. It also updates the player list for each lobby, ensuring accurate representation and visualization of players.

- Gameplay: The cloud application orchestrates the gameplay experience. It randomly selects a subset of questions from the database for each game session and initiates a game loop. The loop includes the following steps:

- Sequentially presenting questions to users.

- Collecting and saving user responses in the database.

- Retrieving and sending correct answers to all users.

- Updating scores and leaderboards in the database based on user performance.

- Progressing to the next round if the game is not over.

- Determining winners, updating user statistics, and reverting the game back to the lobby if the game concludes.
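The game loop above can be sketched in a few lines. This is a minimal, framework-free Python sketch, not our actual C# Azure Functions code: the `Question`, `GameSession`, and `run_game` names, the one-point-per-correct-answer scoring, and the `get_response` and `broadcast` callbacks (stand-ins for database writes and SignalR messages) are all assumptions made for illustration.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Question:
    qid: str
    prompt: str
    answer: str


@dataclass
class GameSession:
    players: list[str]
    questions: list[Question]
    scores: dict[str, int] = field(default_factory=dict)
    responses: list[tuple[str, str, str]] = field(default_factory=list)
    stage: str = "lobby"


def run_game(pool, players, rounds, get_response, broadcast, rng=None):
    """Run one session: pick questions, loop over rounds, pick winners."""
    rng = rng or random.Random()
    # Randomly select a subset of questions for this session.
    session = GameSession(
        players=list(players),
        questions=rng.sample(pool, rounds),
        scores={player: 0 for player in players},
        stage="play",
    )
    for question in session.questions:
        # Present the question to every player.
        broadcast("question", question.prompt)
        for player in session.players:
            # Collect and save each response.
            given = get_response(player, question)
            session.responses.append((player, question.qid, given))
            # Update the score (hypothetical rule: one point if correct).
            if given == question.answer:
                session.scores[player] += 1
        # Send the correct answer and the updated leaderboard to everyone.
        broadcast("answer", question.answer)
        broadcast("leaderboard", dict(session.scores))
    # Game over: determine the winners, then revert to the lobby.
    best = max(session.scores.values())
    winners = sorted(p for p, score in session.scores.items() if score == best)
    session.stage = "lobby"
    return session, winners
```

In the real system each of these steps is spread across separate functions and persisted in the database; the sketch only shows the order of operations.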

Database:

- User data storage: The database stores various user-related information, including:

- Basic data such as names, customizable usernames, and app preferences.

- User statistics, such as the number of games played, games won, and total points earned. This information allows for tracking and analyzing user progress and performance.

- Game data storage: The database holds essential data related to the game itself, including:

- Questions and their corresponding answers.

- Grading and submission information for different question types, facilitating accurate evaluation of user responses.

- Live game sessions and their associated details, such as the participating users and the questions used in the session. This data allows for maintaining the integrity of ongoing games and tracking session-specific information.

- Statistical data storage: The database captures statistical information to provide insights into the overall usage and performance of the Product, including:

- Total number of games created, indicating the popularity and engagement of the game.

- Total number of user accounts created, reflecting the user base and growth.

- Total number of user accounts deleted, providing insights into user retention.

- Average number of questions and users per game, offering a measure of game complexity and player engagement.

- Average grade for each question, giving an indication of question difficulty and user performance.

The database serves as a central repository for storing and maintaining the system data required throughout the lifetime of the Product. It enables efficient data retrieval and updates to support seamless gameplay, user profiles, and game statistics.
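To give a feel for the kind of records and statistics listed above, here is a small Python sketch. It is only an illustration under my own assumptions: the `UserStats` fields mirror the statistics named in this section, but the class itself, `record_game`, and `average_grade_per_question` are hypothetical names, and the real data lives in database collections rather than in memory.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class UserStats:
    username: str
    games_played: int = 0
    games_won: int = 0
    total_points: int = 0

    def record_game(self, points, won):
        """Update the per-user statistics after one finished game."""
        self.games_played += 1
        self.games_won += int(won)
        self.total_points += points


def average_grade_per_question(responses):
    """Average the grades for each question.

    `responses` is an iterable of (question_id, grade) pairs,
    where each grade is assumed to be a number between 0 and 1.
    """
    totals = defaultdict(lambda: [0.0, 0])  # question_id -> [sum, count]
    for question_id, grade in responses:
        totals[question_id][0] += grade
        totals[question_id][1] += 1
    return {qid: total / count for qid, (total, count) in totals.items()}
```

A low average for a question suggests it is hard (or badly worded), which is the "question difficulty" signal mentioned above.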

What is it like working with a professional client?

Working with a professional client like SLB for our group project was an incredibly challenging yet rewarding experience. The client’s professional nature brought a sense of urgency and accountability to the project. Here are some key parts of our collaboration:

- Deadlines and Progress Meetings: SLB, as a professional client, emphasized the importance of meeting deadlines. We had regular progress meetings every two weeks to discuss our project’s advancement and address any challenges or concerns. These meetings provided an opportunity for us to align our work with the client’s expectations and ensure steady progress.

- Client Objectives: SLB had clear objectives and desired outcomes for the project. They wanted to see tangible results aligned with their sustainability initiatives. This meant that we needed to understand their requirements thoroughly and ensure our work met their expectations.

- Evolving Plans: Initially, SLB had plans for an MVP (Minimum Viable Product) within the first month. However, beyond that, we were given the autonomy to set our own goals and decide on the features we wanted to add. This allowed us to take ownership of the project and prioritize the implementation of additional functionalities based on our vision and user feedback.

- Critiques and Clarity: SLB provided us with frequent feedback and critiques throughout the project. Sometimes, they themselves were unsure about their exact requirements, which posed a challenge. However, their critiques pushed us to refine our work continuously and strive for excellence. It required us to communicate effectively and seek clarification to ensure we were aligned with their expectations.

- Professional Guidance: Throughout the project, SLB offered invaluable professional guidance. They shared their expertise on project planning, work structuring, inter-team communication, and what it’s like to work in a professional environment. This guidance enabled us to learn and develop essential skills, preparing us for future roles in the industry.

- Personal and Skill Development: Working with a professional client like SLB allowed us to grow both personally and professionally. Their high expectations and emphasis on performance pushed us to excel. We developed our skills in project management, communication, collaboration, and adaptability, preparing us for real-world job environments.

In conclusion, while working with a professional client like SLB presented its challenges, the experience was invaluable. It provided us with the opportunity to work closely with a real client, navigate complex requirements, and deliver a product that met their objectives. The guidance and critiques offered by SLB not only enhanced our project but also helped us grow individually and prepare for future professional endeavors.

My evangelist roles

- What is an evangelist role?

- Evangelists are people whose charge is to oversee and, if necessary, direct various “cross-cutting” project concerns that affect many different aspects of the development. As such, the evangelists’ analysis of the project is extremely important to the customer because it gives a view of the state of the project independent of its internal management breakdown.

- As evangelists, we are expected to keep a wiki page (linked below) that tracks the past, present and future directions of our topic with regard to the project, make a detailed weekly progress report available to the customer, and participate in extra meeting hours. Evangelists attend 2 extra 1-hour meetings per week on top of the 3 regular class meetings.

- My evangelist role is **Source Control/Testing and Deployment Evangelist.** This sounds like a lot of work, and it was. It is a combination of 3 roles: source control, testing, and deployment. These are 3 critical jobs of a DevOps engineer, and this was my first time doing this kind of work in a professional environment.

- I am responsible for managing the project’s source control repository, designing a system for developing and interacting with it, and making sure that people follow the system. For example, this includes the process for merging, continuous integration/continuous delivery (CI/CD), branch standardization, naming conventions, etc. If someone has a question about the status of our repository, I should be able to answer it.

- I am responsible for understanding what the testing requirements are for the project, how the system architecture and implementation address those requirements, and how the development process is achieving those goals. This includes small-scale unit testing and large-scale systems testing, the metrics of those tests, and how those tests relate to proving that the system is or is not meeting its intended goals. I am also responsible for understanding the deployment techniques in use, the current deployment status, and the future plans for deploying the system across all targeted platforms.

What I did

Backend Developer

- My main role in the project was backend developer. For the tech stack, we mainly used C#, ASP.NET, and serverless Azure Functions in the backend. I worked mostly in the cloud system, which included writing Azure Functions and making sure that the frontend could call them through HTTP requests. I also worked on the database system, which uses the Azure Cosmos DB API for MongoDB.

- A little bit about why we used serverless Azure Functions:

- Each function handles separate backend logic corresponding to an HTTP endpoint that can be triggered by a request from the client. Each function is deployed and run independently and concurrently (microservice architecture), allowing the system to be resilient to failures.

- Interacts with the database to read/write data relevant to each function

- Interacts with SignalR to broadcast messages to connected clients when necessary

- For the database part, I took part in designing the database system, including the database structure and the details and types of the collections. This really felt like a system design question. I wrote a lot of code using the MongoDB API to efficiently query and mutate the data in the database and to let the database interact with the cloud application.

- Below is the overview of the architecture of our project

Why is the system scalable and extensible?

- The application architecture’s main selling point lies in the flexibility it offers for the question architecture, enabling the incorporation of multiple question types and grading strategies. Questions are divided into five components: display format, display code, question info, submission format, and grading strategy. The display format specifies how question information is formatted and passed to the front end for rendering using the display code, which is stored as an HTML document in blob storage. The submission format determines how information is sent to the backend for grading using a grading strategy. Together, the submission format and display format form a UI strategy, which includes a pointer to the front-end code responsible for rendering the information.

- This approach decouples the question information from its display and scoring mechanisms. In theory, this allows for the addition of more complex question types, such as point-and-click map questions or even entire mini-games, with minimal modifications required, primarily involving class definitions, grading strategies, and front-end code.

- The game is designed for easy scalability and extensibility through the use of Azure Functions. Each function scales independently, and new functions can be added through regular repository pull requests. Other game components, such as the database and SignalR, can also be scaled based on performance requirements. During testing, the database was identified as the bottleneck due to the high volume of request units needed to run the game.

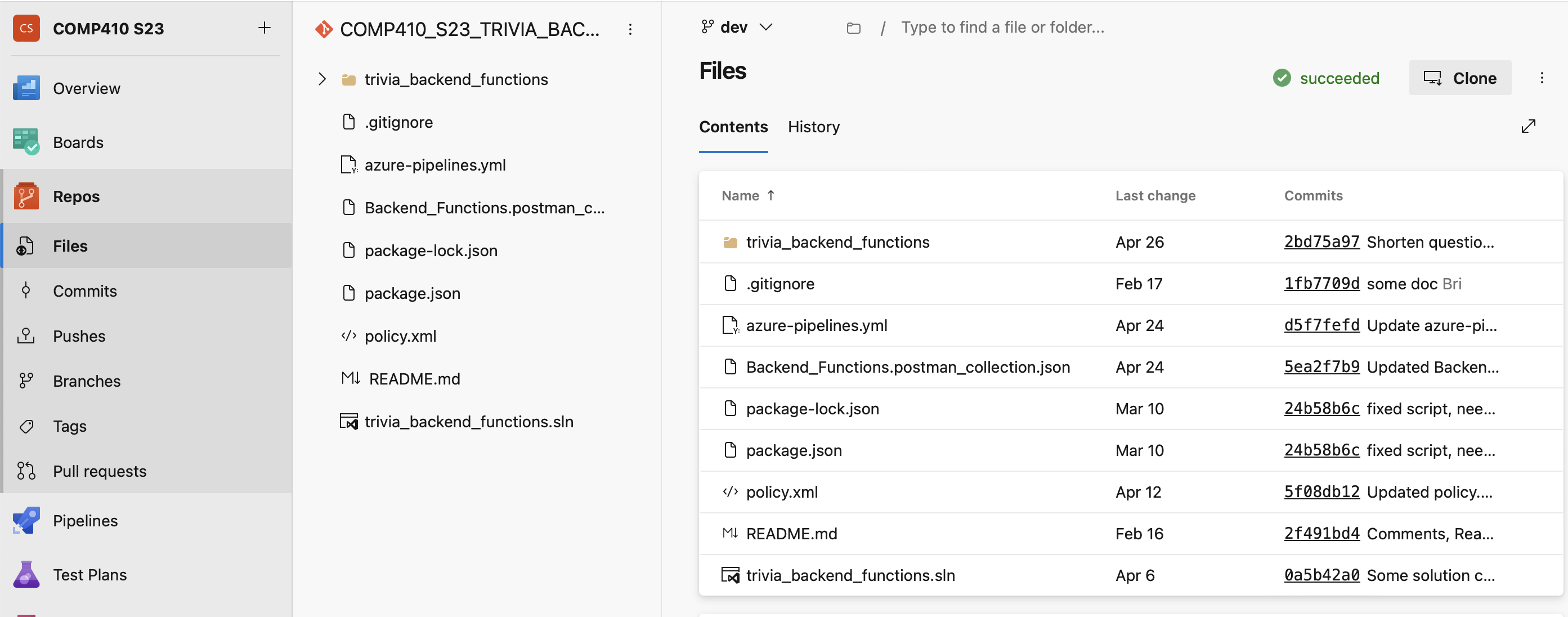
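To make the question architecture described in this section more concrete, here is a small Python sketch of the idea. It is not our actual C# code: the `UIStrategy`, `QuestionType`, and `REGISTRY` names, the field names, the `display/...html` paths, and the linear partial-credit rule for sliders are all assumptions for illustration. What it tries to show is the decoupling: question info is plain data, and each question type plugs in its own display format, submission format, and grading strategy.

```python
from dataclasses import dataclass
from typing import Any, Callable

# A grading strategy maps (question info, submission) to a score in [0, 1].
GradingStrategy = Callable[[dict[str, Any], dict[str, Any]], float]


def grade_exact(info, submission):
    """Full credit only when the submitted choice matches the answer."""
    return 1.0 if submission["choice"] == info["answer"] else 0.0


def grade_slider(info, submission):
    """Partial credit that falls off linearly with distance from the answer."""
    span = info["max"] - info["min"]
    error = abs(submission["value"] - info["answer"])
    return max(0.0, 1.0 - error / span)


@dataclass(frozen=True)
class UIStrategy:
    display_format: tuple[str, ...]     # fields sent to the front end
    submission_format: tuple[str, ...]  # fields expected back for grading
    display_code: str                   # pointer to the rendering code


@dataclass(frozen=True)
class QuestionType:
    name: str
    ui: UIStrategy
    grade: GradingStrategy


REGISTRY: dict[str, QuestionType] = {}


def register(question_type):
    """Adding a new question type is a single registration call."""
    REGISTRY[question_type.name] = question_type


register(QuestionType(
    "true_false",
    UIStrategy(("prompt",), ("choice",), "display/true_false.html"),
    grade_exact,
))
register(QuestionType(
    "slider",
    UIStrategy(("prompt", "min", "max"), ("value",), "display/slider.html"),
    grade_slider,
))


def to_display(type_name, info):
    """Keep only the display-format fields, so the answer stays on the backend."""
    ui = REGISTRY[type_name].ui
    return {key: info[key] for key in ui.display_format}


def grade(type_name, info, submission):
    """Validate the submission format, then apply the type's grading strategy."""
    question_type = REGISTRY[type_name]
    missing = [k for k in question_type.ui.submission_format if k not in submission]
    if missing:
        raise ValueError(f"missing submission fields: {missing}")
    return question_type.grade(info, submission)
```

Under this sketch, a point-and-click map question would only need a new grading function, a new `UIStrategy`, and one more `register` call; `to_display` and `grade` would stay untouched.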

Source Control

- For Source Control, I am responsible for managing branches and ensuring proper branch name standardization in Azure DevOps. I configure and set up Azure DevOps as our source control system, enabling effective version control and collaboration within the development team. I integrate Azure DevOps pipeline with our source control system, automating build, test, and deployment processes to ensure rapid and reliable software delivery.

- I facilitate code reviews, enforce coding standards, and utilize code analysis tools within Azure DevOps to maintain code quality and adherence to best practices. I handle versioning and tagging processes in Azure DevOps, labeling releases and critical code changes for easy tracking and rollback if needed.

- In addition, I optimize the performance of Azure DevOps, ensuring efficient repository management, cleanup, and implementing productivity-enhancing tools and techniques. I promote collaboration within the team by setting up workflows, facilitating code sharing, and documenting best practices for effective usage of Azure DevOps.

Overall, as a source control evangelist, my role revolves around effectively utilizing Azure DevOps for source control, pipeline integration, code review, versioning, and collaboration to streamline our software development and delivery processes.

Deployment

Using Azure CLI, Python, and Java, I created a deployment script that simplifies the process and automates the setup of essential services. In this section, I will share the details of this deployment work, highlighting the key steps involved and the benefits it brings.

Creating the Deployment Script:

To enhance the deployment experience, I crafted a user-friendly deployment script using Python. This script seamlessly interacts with Azure CLI and empowers users to configure critical details, such as service names and settings. By storing these prompts in the adaptable config.py file, customization for different environments becomes effortless and straightforward.

Deploying Essential Services:

The deployment script takes care of provisioning the core services that drive the application’s functionality. These services encompass:

- Static Web App: The script automates the deployment of the application’s front-end as a static web app, ensuring easy access for end-users.

- Azure CosmosDB: To guarantee efficient data storage and retrieval, the script sets up Azure CosmosDB, a robust and scalable database solution.

- API Management: By seamlessly integrating with API Management, the deployment script simplifies the management and consumption of APIs, fostering a seamless user experience.

- Function App: The script orchestrates the deployment of the Function App, enabling the execution of serverless functions tailored to specific application requirements.

- SignalR: Real-time communication and updates between the application and users are made possible through the inclusion of SignalR, facilitating dynamic and interactive user experiences.

- Blob Storage Account: For secure and scalable data storage, the script provisions a Blob Storage Account, catering to various data storage needs within the application.
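A stripped-down sketch of how such a script can look is below. It is not the actual script: the `CONFIG` values (resource names, region, publisher details) are hypothetical placeholders for what lives in config.py, and the exact `az` options we used may have differed. The sketch builds one Azure CLI command per service and defaults to a dry run that only prints the commands.

```python
import shlex
import subprocess

# Hypothetical stand-in for the values kept in config.py.
CONFIG = {
    "resource_group": "treevia-rg",
    "location": "southcentralus",
    "cosmos_account": "treevia-cosmos",
    "storage_account": "treeviastorage",
    "function_app": "treevia-functions",
    "signalr": "treevia-signalr",
    "static_web_app": "treevia-web",
    "apim": "treevia-apim",
    "publisher_email": "admin@example.com",
    "publisher_name": "Treevia Team",
}


def prompt_config(defaults, ask=input):
    """Ask for each setting, keeping the default when the answer is empty."""
    return {
        key: ask(f"{key} [{value}]: ").strip() or value
        for key, value in defaults.items()
    }


def build_commands(cfg):
    """Return one Azure CLI command (as an argument list) per service."""
    rg, loc = cfg["resource_group"], cfg["location"]
    return [
        ["az", "group", "create", "--name", rg, "--location", loc],
        ["az", "cosmosdb", "create", "--name", cfg["cosmos_account"],
         "--resource-group", rg, "--kind", "MongoDB"],
        # The storage account comes first because the Function App needs it.
        ["az", "storage", "account", "create", "--name", cfg["storage_account"],
         "--resource-group", rg, "--location", loc, "--sku", "Standard_LRS"],
        ["az", "functionapp", "create", "--name", cfg["function_app"],
         "--resource-group", rg, "--storage-account", cfg["storage_account"],
         "--consumption-plan-location", loc, "--runtime", "dotnet",
         "--functions-version", "4"],
        ["az", "signalr", "create", "--name", cfg["signalr"],
         "--resource-group", rg, "--sku", "Free_F1",
         "--service-mode", "Serverless"],
        ["az", "apim", "create", "--name", cfg["apim"],
         "--resource-group", rg, "--publisher-email", cfg["publisher_email"],
         "--publisher-name", cfg["publisher_name"]],
        ["az", "staticwebapp", "create", "--name", cfg["static_web_app"],
         "--resource-group", rg],
    ]


def deploy(cfg, dry_run=True, run=subprocess.run):
    """Print every command; execute it only when dry_run is False."""
    for command in build_commands(cfg):
        print(shlex.join(command))
        if not dry_run:
            run(command, check=True)
```

Calling `deploy(prompt_config(CONFIG))` walks through the prompts and prints the commands without touching Azure; passing `dry_run=False` runs them and stops at the first failure.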

Integration with Database Population Script:

To optimize efficiency, I integrated the deployment script with a script written in Java that populates the application’s databases. This integration automates the database population process, saving valuable time and effort during deployment.

By developing an efficient deployment script and seamlessly integrating it with a database population script, I have established a streamlined workflow for deploying applications on Azure Cloud Services. This process simplifies the setup of essential services, offers customization options through user prompts, and automates database population. Leveraging the power of Azure CLI, Python, and Java, this deployment workflow ensures a seamless and successful deployment experience for our project.

Testing

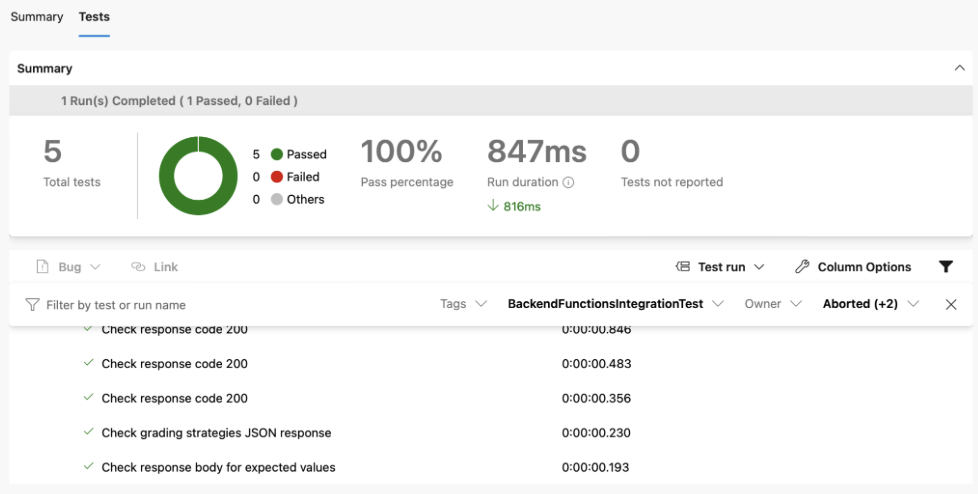

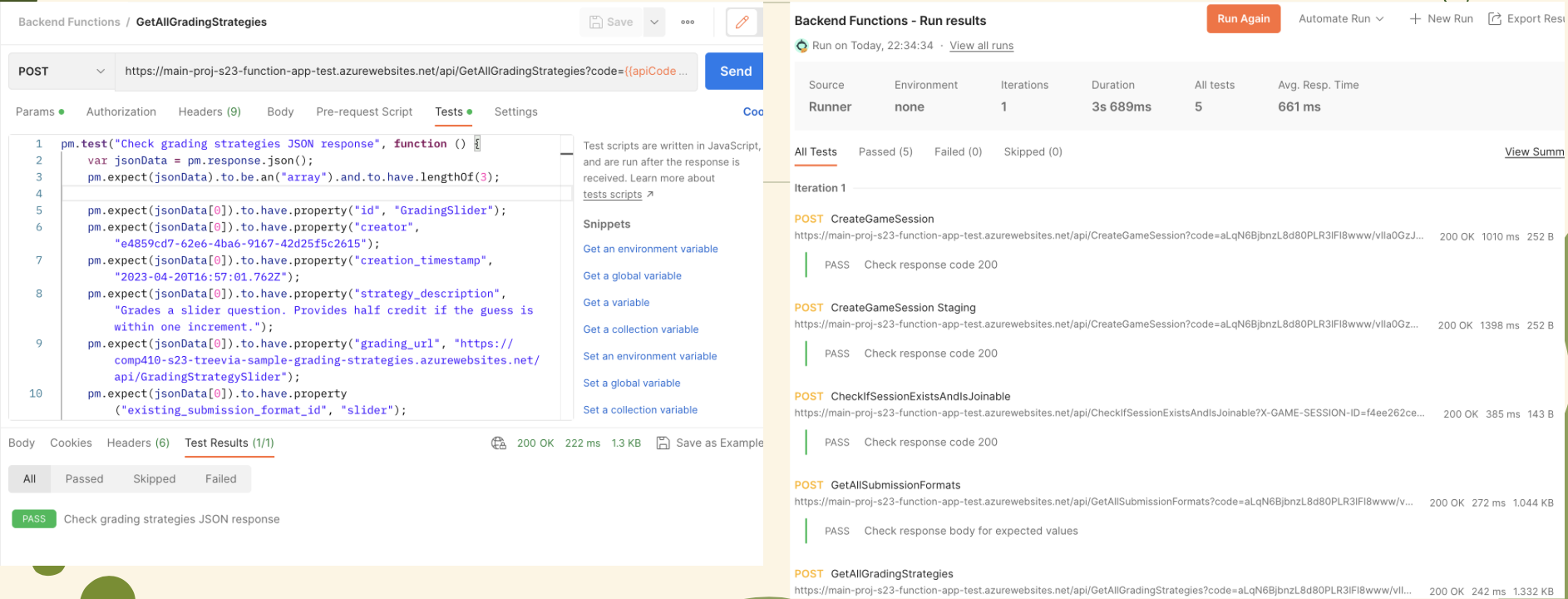

In our testing process, we have implemented a streamlined workflow that combines Postman collection export and DevOps integration. This approach enables us to efficiently test our APIs, ensuring their functionality remains intact during code changes and pull request merging.

- Postman Collection Export: We start by exporting the relevant Postman collections that contain the APIs we need to test. This process simplifies packaging the collections for easy integration with our testing framework.

- Unit Testing Creation: Additionally, we create unit tests to validate the individual components and functions of our application. Unit tests help ensure that each isolated part functions correctly and meets the desired requirements.

- DevOps Integration: To automate our testing process, we integrate the exported Postman collections and unit tests into our DevOps pipeline. We create a Postman Newman task within the pipeline, which executes the APIs present in the collections and runs the unit tests. This integration enables automatic testing whenever a pull request is merged or code is pushed to the branch.

- Ensuring API Functionality: By incorporating Postman collections and unit tests into our DevOps pipeline, we can thoroughly test our APIs to confirm their continued functionality. This approach helps identify any issues or regressions early in the development cycle, enabling prompt resolution and maintaining the overall quality of our application.
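As a rough idea of what the Newman step does, here is a Python sketch of a helper that runs exported collections and reports which ones failed. Our pipeline used a Newman task in Azure DevOps rather than this script, so treat `newman_command`, `run_api_tests`, and the file names in the usage note as hypothetical. The sketch relies on Newman exiting with a non-zero code when a request or test assertion fails.

```python
import subprocess


def newman_command(collection, environment=None, junit_report=None):
    """Build the argument list for `newman run` on an exported collection."""
    command = ["newman", "run", collection]
    if environment:
        command += ["--environment", environment]
    if junit_report:
        # A JUnit-style report lets the pipeline display the test results.
        command += ["--reporters", "cli,junit",
                    "--reporter-junit-export", junit_report]
    return command


def run_api_tests(collections, run=subprocess.run, **options):
    """Run every collection and return the ones whose run failed."""
    failed = []
    for collection in collections:
        result = run(newman_command(collection, **options))
        if result.returncode != 0:
            failed.append(collection)
    return failed
```

For example, `run_api_tests(["lobby.postman_collection.json"], environment="dev.postman_environment.json")` returns an empty list when every request in the collection passes, and a pipeline step can fail the build whenever the list is non-empty.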

Benefits of the Workflow:

- Automation: Automating the testing process saves time and effort, allowing us to focus on other crucial tasks.

- Consistency: Utilizing the same Postman collections and unit tests ensures consistent and repeatable testing across different code changes and branches.

- Early Issue Detection: Testing APIs and running unit tests during development helps identify and address issues at an early stage, reducing the risk of critical issues reaching production.

- Collaborative Testing: Integrating testing into the larger DevOps workflow promotes collaboration and facilitates efficient bug resolution.

Our streamlined testing workflow, combining Postman collection export, unit testing creation, and DevOps integration, empowers us to test our APIs effectively. By automating the execution of Postman collections and unit tests within our pipeline, we can ensure the continued functionality of our APIs throughout the development cycle. This approach improves testing efficiency, maintains consistent results, and helps us deliver a high-quality and reliable application.

Some thoughts on why this is the best class I have taken

I feel that I have experienced tremendous personal growth in terms of collaborating within a large team and honing my software development skills. Throughout the class, I gained valuable hands-on experience working with various aspects of the application. Initially, I had little knowledge about the technologies and services involved, such as C#, .NET, and Azure services. Even the responsibilities of my evangelist roles, including source control, testing, and deployment, were entirely new to me. However, I immersed myself in learning through practical experience during the class. I am now confident in my ability to effectively manage source control on Azure DevOps and GitHub, establish efficient testing frameworks with Postman integration, and create complete, end-to-end, automated deployment scripts for deploying all system services.

The skills I acquired in COMP 410 have inspired me to embark on more personal projects. I no longer shy away from source control, testing, deployment, or backend work, both in my personal projects and future internships. I have become more willing to take risks and tackle tasks outside of my comfort zone. Additionally, I now understand the crucial importance of documentation alongside coding. Proper documentation can significantly help teammates who are picking up tasks, potentially resolving up to 70% of the issues they may encounter.

Moving forward, I have learned a vital lesson from this class: I will never proceed without setting up a testing framework. This was a challenging lesson, as we left testing until the end of the class, causing numerous issues with our pipeline build along the way. Moreover, I have realized the significance of starting projects promptly. Brainstorming ideas, designing the system, and creating essential diagrams such as use-case and system blocks require ample time and attention to detail. I now appreciate the importance of initiating projects early to allow for thorough planning and implementation. This is definitely the best class that I have taken at Rice University.
